Catalog Integration Snowflake

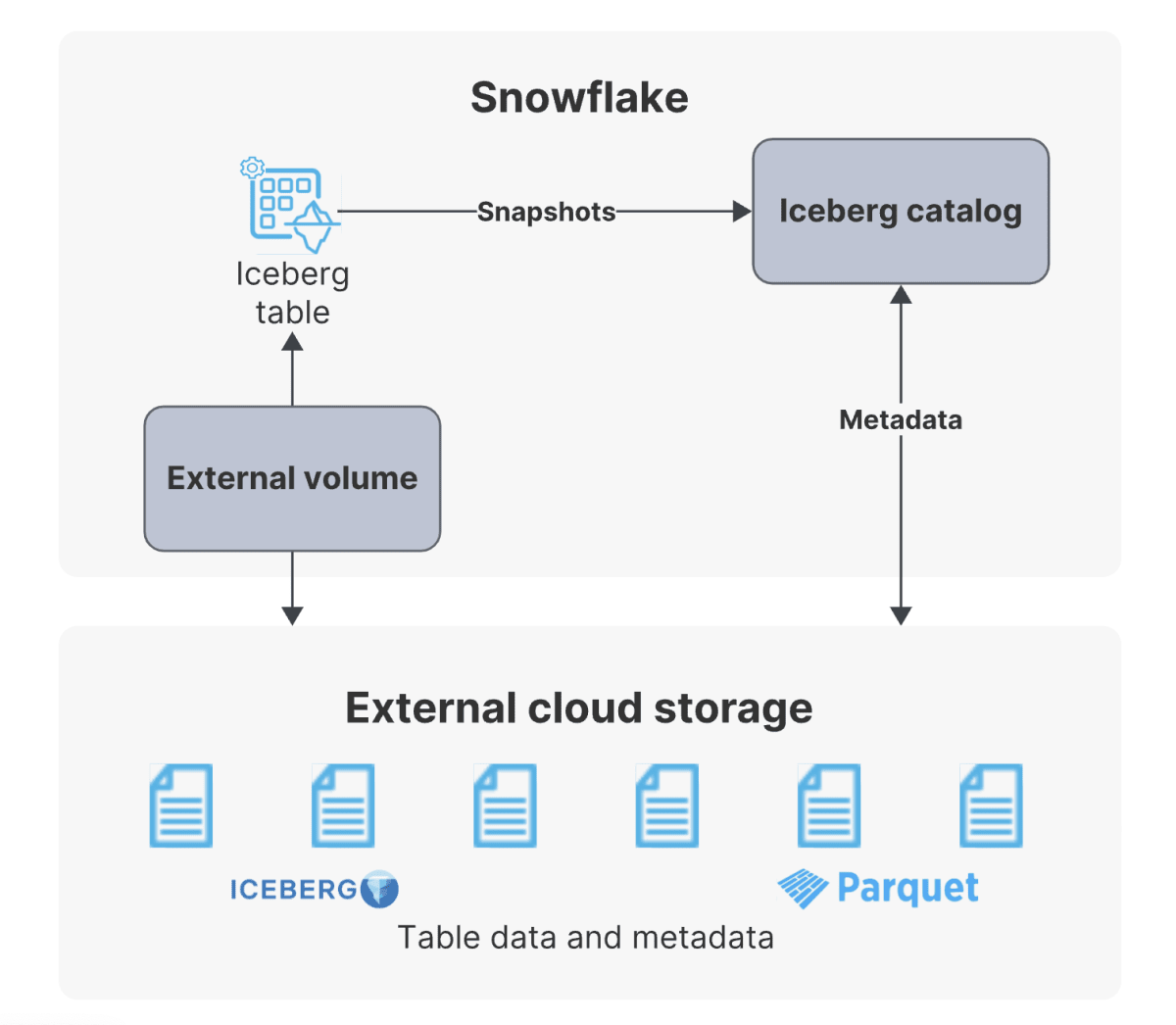

Snowflake Open Catalog is a managed catalog implementation for Apache Iceberg™ tables and is built on the open source Apache Iceberg™ REST protocol.

CREATE CATALOG INTEGRATION creates a new catalog integration for Apache Iceberg™ tables in the account or replaces an existing catalog integration. The syntax of the command depends on the type of external catalog. One form covers Apache Iceberg™ metadata files and Delta table files, CREATE CATALOG INTEGRATION (Apache Iceberg™ REST) covers Apache Iceberg™ REST catalogs, and a further form creates a catalog integration for Apache Iceberg™ tables that integrate with Snowflake Open Catalog. In each case the name parameter is a string that specifies the identifier (name) for the catalog integration. A single catalog integration can support one or more Iceberg tables.

A separate command lists the catalog integrations in your account; the output returns integration metadata and properties. In addition to SQL, you can also use other interfaces, such as the Snowflake REST API. There, a query parameter filters the command output by resource name, and one of the documented responses means that the request was successfully accepted but is not completed yet.

Creating a REST catalog integration in Snowflake takes 3 steps. For R2, the prerequisites are a Snowflake account with the necessary privileges, an R2 bucket with the data catalog enabled, and an R2 API token with both R2 and data catalog permissions.

To connect to your Snowflake database using Lakehouse Federation, you must create the required objects in your Databricks Unity Catalog metastore.

From a post by とーかみ (Tokami): Snowflake Iceberg tables have an automatic table metadata refresh feature, which is used by configuring the catalog integration against the Glue Data Catalog.

When to implement clustering keys: clustering keys are most beneficial in scenarios involving Snowflake tables with extensive amounts of data.
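
Because the syntax of CREATE CATALOG INTEGRATION depends on the source, it can help to see one form spelled out. The following is a minimal sketch, not Snowflake's own tooling: the helper name `build_create_catalog_integration` and the integration name `my_iceberg_int` are hypothetical, and the `CATALOG_SOURCE = OBJECT_STORE`, `TABLE_FORMAT`, and `ENABLED` parameters reflect the object-storage form (Iceberg metadata files or Delta table files) as commonly documented. Check the current Snowflake reference before running the generated statement.

```python
import re

# Assumption: only plain unquoted identifiers are accepted here
# (a letter or underscore, then letters, digits, underscores, or dollar signs).
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*")


def build_create_catalog_integration(name, table_format="ICEBERG",
                                     enabled=True, replace=False):
    """Render a CREATE CATALOG INTEGRATION statement for files in object storage.

    Hypothetical helper: it only builds the SQL text and does not connect
    to Snowflake or execute anything.
    """
    if not _IDENTIFIER.fullmatch(name):
        raise ValueError(f"not a plain unquoted identifier: {name!r}")
    table_format = table_format.upper()
    if table_format not in ("ICEBERG", "DELTA"):
        raise ValueError(f"unsupported table format: {table_format!r}")
    create = "CREATE OR REPLACE" if replace else "CREATE"
    return (
        f"{create} CATALOG INTEGRATION {name}\n"
        "  CATALOG_SOURCE = OBJECT_STORE\n"
        f"  TABLE_FORMAT = {table_format}\n"
        f"  ENABLED = {'TRUE' if enabled else 'FALSE'};"
    )


if __name__ == "__main__":
    print(build_create_catalog_integration("my_iceberg_int", replace=True))
```

The REST and Snowflake Open Catalog forms take additional connection and authentication parameters, which this sketch deliberately leaves out.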

How to integrate Databricks with Snowflake-managed Iceberg Tables by

Simplify Snowflake data loading and processing with AWS Glue DataBrew

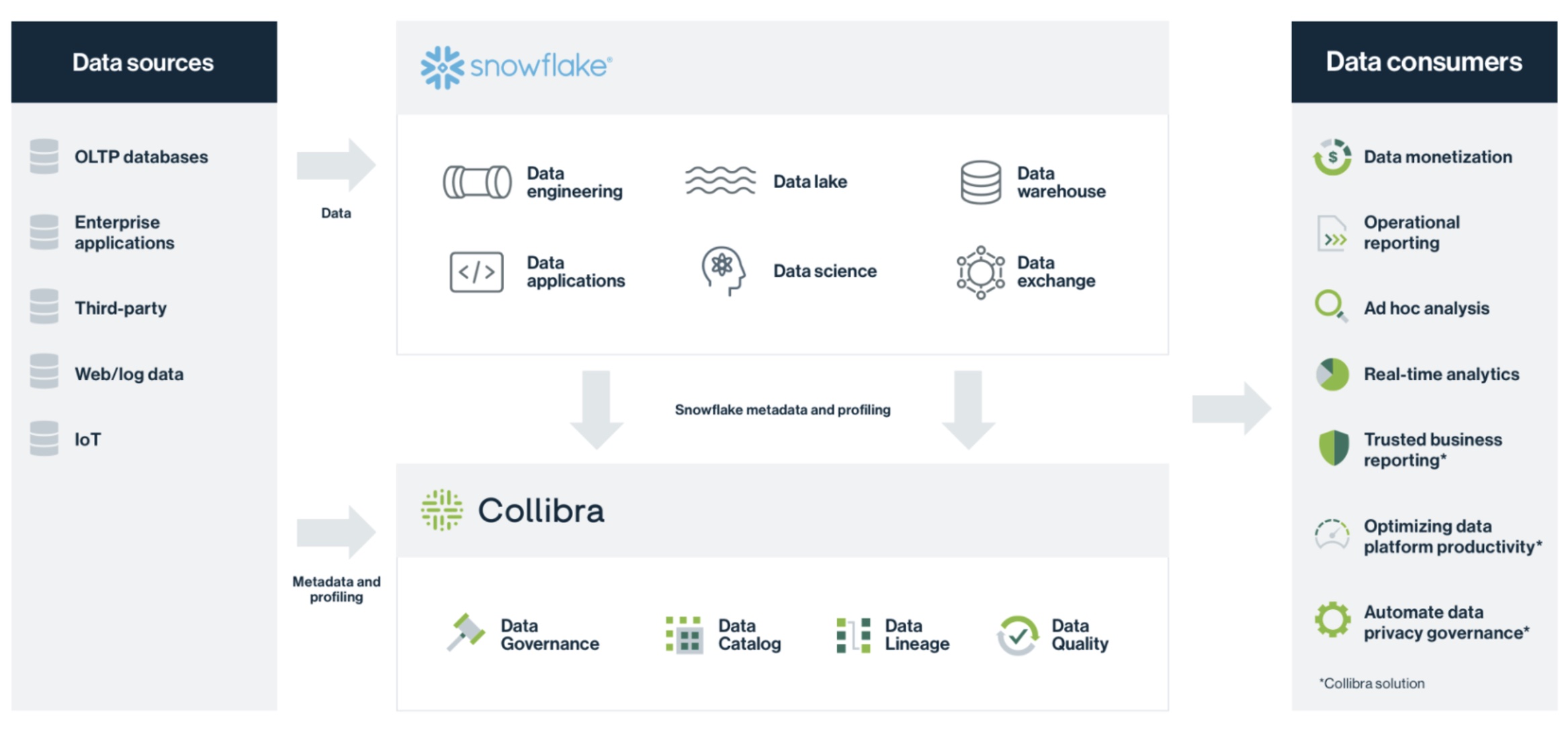

Collibra Data Governance with Snowflake

What you Need to Understand about Snowflake Data Catalog Datameer

AWS Lambda and Snowflake integration + automation using Snowpipe ETL

Integrating Salesforce Data with Snowflake Using Tableau CRM Sync Out

New Snowflake features: how Iceberg Table and Polaris Catalog work

Snowflake Catalog Integration API Documentation Postman API Network

How to automate externally managed Iceberg Tables with the Snowflake

Snowflake Releases Polaris Catalog Transforming Data Interoperability

